
Privacy risks of ChatGPT

Implicit privacy risks of Chatbots / Natural Language Queries

Introduction

With ChatGPT, we are seeing a resurgence of Chatbots. I have previously written an article on the enterprise applications of ChatGPT and similar Large Language Models (LLMs).

We can only expect this adoption to grow across verticals such as Customer Support, Health, Banking, and Dating. This will inevitably lead to user queries being harvested as a ‘source of personal data’ for scenarios such as advertising and phishing.

Most users are sufficiently aware of the privacy risks to avoid sharing explicit Personally Identifiable Information (PII), e.g., credit card numbers, bank account details, or health conditions. In this article, we instead highlight the implicit privacy risks of Natural Language Queries, which are akin to side-channel attacks.

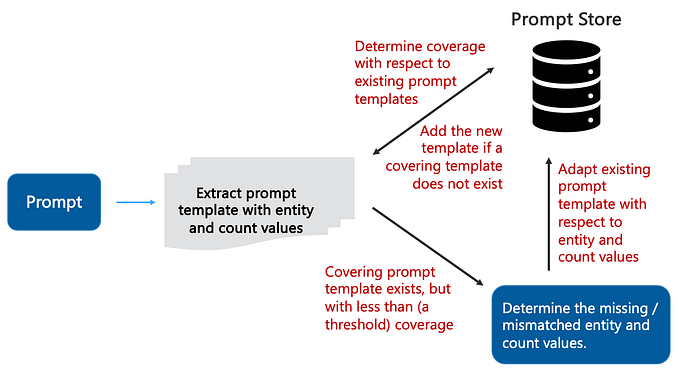

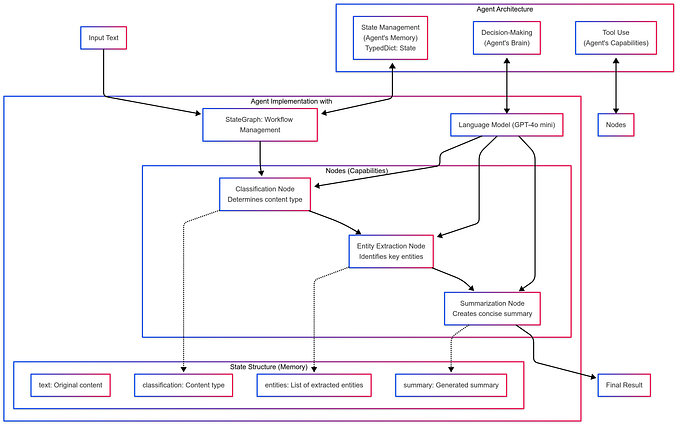

We propose a solution in the form of a user-side (chat client) module that intercepts the user's natural language query and leverages the same “generative model” behind ChatGPT to generate a privacy-preserving variant of the original query.
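
To make the shape of such a client module concrete, here is a minimal Python sketch. It is an illustration under assumptions, not the actual implementation: the class name `PrivacyInterceptor`, the `generate` and `send` callables, and the wording of `REWRITE_PROMPT` are all hypothetical. `generate` stands in for whatever interface exposes the generative model, and where that model runs is left open here.

```python
from typing import Callable

# Hypothetical prompt wording; the article does not prescribe one.
REWRITE_PROMPT = (
    "Rewrite the following query so that it keeps the user's intent "
    "but reveals no personal details:\n{query}"
)


class PrivacyInterceptor:
    """Client-side module sitting between the user and the chatbot service."""

    def __init__(self, generate: Callable[[str], str], send: Callable[[str], str]):
        self.generate = generate  # assumed: wraps the generative model
        self.send = send          # assumed: forwards a query to the chatbot service

    def ask(self, user_query: str) -> str:
        # Step 1: intercept the raw query before it leaves the client.
        prompt = REWRITE_PROMPT.format(query=user_query)
        # Step 2: ask the generative model for a privacy-preserving variant.
        safe_query = self.generate(prompt).strip()
        # Step 3: forward only the rewritten variant to the service.
        return self.send(safe_query)
```

Passing the model and the transport in as plain callables keeps the sketch independent of any particular vendor API, and makes it easy to swap in stubs when testing the interception logic.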

Privacy Risks

Let us consider the two use cases below to understand the significance of such implicit privacy risks: